Statistical Learning Theory and Applications
Lecture 1: Introduction
Instructor: Quan Wen
SCSE@UESTC, Fall 2021

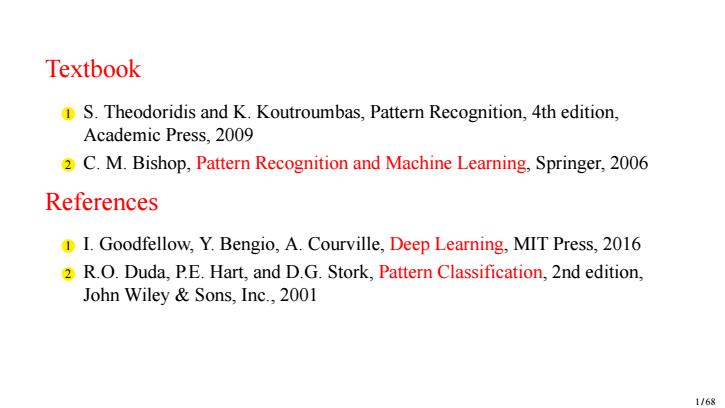

Textbook

1. S. Theodoridis and K. Koutroumbas, Pattern Recognition, 4th edition, Academic Press, 2009
2. C. M. Bishop, Pattern Recognition and Machine Learning, Springer, 2006

References

1. I. Goodfellow, Y. Bengio, and A. Courville, Deep Learning, MIT Press, 2016
2. R. O. Duda, P. E. Hart, and D. G. Stork, Pattern Classification, 2nd edition, John Wiley & Sons, Inc., 2001

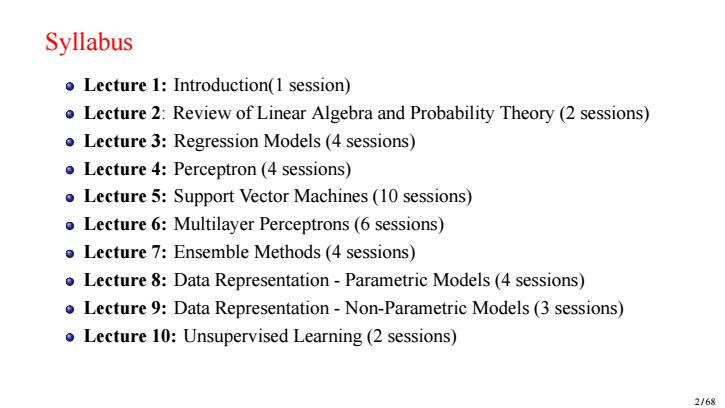

Syllabus

- Lecture 1: Introduction (1 session)
- Lecture 2: Review of Linear Algebra and Probability Theory (2 sessions)
- Lecture 3: Regression Models (4 sessions)
- Lecture 4: Perceptron (4 sessions)
- Lecture 5: Support Vector Machines (10 sessions)
- Lecture 6: Multilayer Perceptrons (6 sessions)
- Lecture 7: Ensemble Methods (4 sessions)
- Lecture 8: Data Representation - Parametric Models (4 sessions)
- Lecture 9: Data Representation - Non-Parametric Models (3 sessions)
- Lecture 10: Unsupervised Learning (2 sessions)

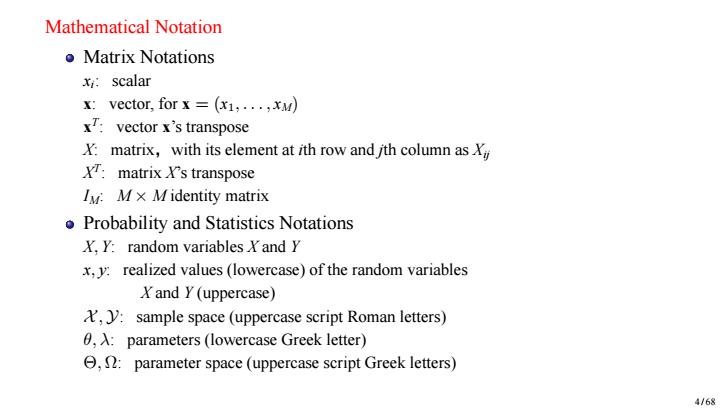

Mathematical Notation

Matrix Notations

- $x_i$: scalar
- $\mathbf{x}$: vector, with $\mathbf{x} = (x_1, \ldots, x_M)$
- $\mathbf{x}^T$: transpose of the vector $\mathbf{x}$
- $\mathbf{X}$: matrix, whose element in the $i$th row and $j$th column is $X_{ij}$
- $\mathbf{X}^T$: transpose of the matrix $\mathbf{X}$
- $\mathbf{I}_M$: $M \times M$ identity matrix

Probability and Statistics Notations

- $X, Y$: random variables
- $x, y$: realized values (lowercase) of the random variables $X$ and $Y$ (uppercase)
- $\mathcal{X}, \mathcal{Y}$: sample spaces (uppercase script Roman letters)
- $\theta, \lambda$: parameters (lowercase Greek letters)
- $\Theta, \Omega$: parameter spaces (uppercase script Greek letters)
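As a rough illustration of the matrix notation, the sketch below maps each symbol to a plain Python value. It is not part of the course material: the helper names `transpose`, `identity`, and `matmul` are hypothetical, and nested lists stand in for vectors and matrices so that no external library is assumed.

```python
def transpose(X):
    """Return X^T: the element at row i, column j moves to row j, column i."""
    return [list(row) for row in zip(*X)]


def identity(M):
    """Return the M x M identity matrix I_M."""
    return [[1 if i == j else 0 for j in range(M)] for i in range(M)]


def matmul(A, B):
    """Return the matrix product of A (n x k) and B (k x m)."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)]
            for row in A]


x = [1.0, 2.0, 3.0]      # vector x = (x_1, ..., x_M) with M = 3
x_2 = x[1]               # scalar x_2 (Python indexes from 0, the slides from 1)

X = [[1, 2, 3],
     [4, 5, 6]]          # 2 x 3 matrix; X_ij corresponds to X[i - 1][j - 1]
X_T = transpose(X)       # 3 x 2 matrix X^T
I_3 = identity(3)        # 3 x 3 identity matrix I_3
```

With these definitions, `X[0][1]` and `X_T[1][0]` refer to the same element $X_{12}$, and `matmul(X, I_3)` returns a matrix equal to `X`, which is the defining property of the identity matrix.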

Outline (Level 1)

1. Definition of Statistical Learning
2. Application Case of Statistical Pattern Recognition
3. SPR System Framework and Design Cycle
4. Basic Concepts
5. Machine Learning in Different AI Disciplines